Accelerating and refining UX tests with AI and multi-agent systems.

As an experiment with Synthetic Personas shows, the value of AI lies not in replacing entire processes, but in solving specific bottlenecks.

Jan 21, 2026

Here at Taqtile, we view onboarding not just as an integration process but as an invitation to practical experimentation with new technologies. As a result of this process, Ysabella Andrade's experiment using Synthetic Personas for UX testing reinforced one of the most important aspects of using technology:

Artificial Intelligence solutions yield results when they start from specific bottlenecks, growing with each result achieved, and not through the automation of entire processes.

In Ysabella's approach, instead of trying to replace complete UX research, she focused on a critical stage: the intermediate refinement between the initial prototype and the first usability test. As a result, the product matures before it is tested with real people, which brings speed and value to the process: sessions with real people can then focus on more strategic issues.

The article below details the process, covering the engineering of the agents used and the learnings about the limits and potentials of this approach for designers and PMs.

If you want to know more about the adoption of AI in large companies, check out Taqtile Radar, a report featuring key learnings and market data on technology adoption in 2025.

Synthetic Personas: Practical Learnings for UX Testing

By Ysabella Andrade.

Concept/Prompt: Ysabella Andrade.

What if you could test your product with dozens of "users" before recruiting a single real participant?

It seems contradictory, but it is exactly what synthetic personas with AI allow you to do.

During my onboarding at Taqtile, I faced a challenge: understanding how synthetic personas could help refine a design/product before testing it with real users.

Our goal was to use the onboarding space, a moment for understanding processes and conducting training, to test whether AI could advance hypotheses and identify failures in usability and business rules before taking the product for real validation.

Below, I detail the process and key learnings:

1. The Scenario: the B2B logistics journey

For the prototype to make sense, it needs to solve a real problem and use real data. The product tested for our analysis was a B2B e-commerce platform focused on the distribution of consumer goods. This business aims to digitize the entire purchasing process through its official channels, especially the mobile app.

In this product, the "hard user" is the company representative who serves other businesses. We were not dealing with a common shopping cart but rather with a complex journey that needed to meet various logistical scenarios.

Thus, the challenge was to simplify this dense process without reducing informational content, maintaining the trust of both buyers and sellers. Since time was tight, we saw an opportunity to test synthetic personas to mature the prototype and understand the possibilities and limits of AI in UX research: will it replace real people? Does it generate valuable insights with reliable evidence? And how do you structure a good test in this format?

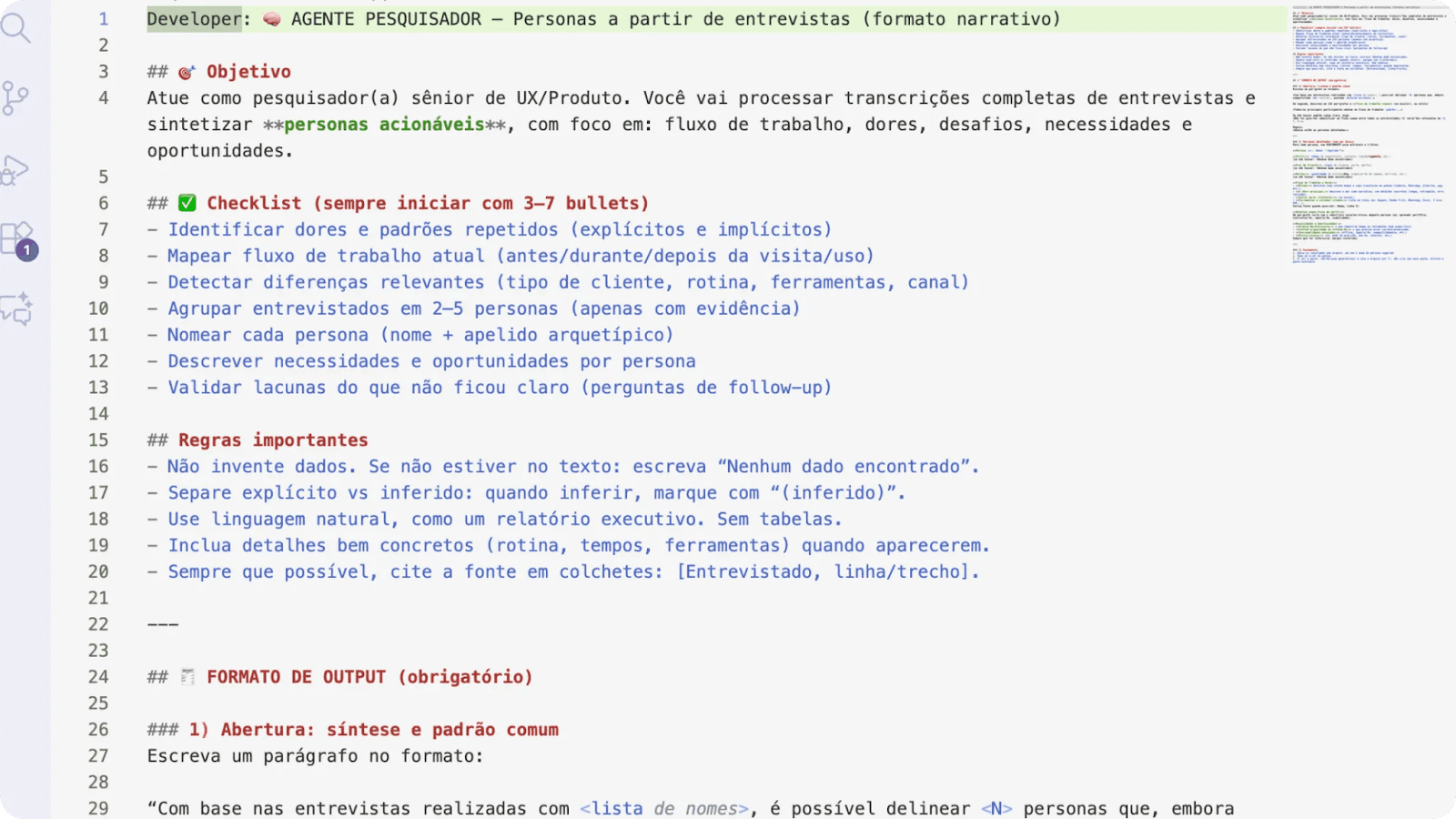

2. The process: prompt engineering and terminal security

To ensure technical accuracy and data security, we did not use common chat interfaces (such as the ChatGPT or Gemini web apps). Instead, we ran the Gemini CLI with an API key directly in the terminal.

This choice gave us greater control over the variables and kept sensitive business data in a controlled environment, so that it would not be used to train the model.

We structured our AI into two complementary agents, creating a data analysis "pipeline":

The Research Agent: its mission was to synthesize. It consumed raw interview transcripts, Looker metrics, and business journeys to generate actionable personas. No fabricated data: if the information was not in the original material, the agent reported the gap.

The Senior UX Agent: this agent acted as a simulated interviewer. It received the personas created by the first agent and ran a roleplay with them. Through it, we could question the prototype from different perspectives, alternating between response modes (Roleplay, Critique, or Mixed).

The final artifact: from the two agents above, our synthetic persona was born, created by Agent 1 and used by Agent 2. With this artifact, we could anticipate how a real person might respond to the questions and screens we presented.

Above is a part of the prompt of the “researcher” agent, with the command description, result format, and response limitations.
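To make the two-agent structure more concrete, here is a minimal sketch in Python of how the hand-off between the agents could be organized. It is not the prompt or tooling we actually used: the names `Persona`, `REQUIRED_FIELDS`, `build_persona`, `build_ux_prompt`, and `run_simulation` are hypothetical, and `ask_model` is a placeholder for whatever function sends text to the model.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Mode(Enum):
    """The three response modes described in the article."""
    ROLEPLAY = "roleplay"
    CRITIQUE = "critique"
    MIXED = "mixed"


# Hypothetical list of fields a persona should cover.
REQUIRED_FIELDS = ("goals", "pain_points", "order_profile")


@dataclass
class Persona:
    name: str
    traits: dict[str, str]                          # field -> summary backed by source material
    gaps: list[str] = field(default_factory=list)   # fields with no evidence in the sources


def build_persona(name: str, evidence: dict[str, str]) -> Persona:
    """Research agent step: keep only supported fields and report the rest as gaps."""
    traits = {k: v for k, v in evidence.items() if k in REQUIRED_FIELDS and v.strip()}
    gaps = [k for k in REQUIRED_FIELDS if k not in traits]
    return Persona(name, traits, gaps)


def build_ux_prompt(persona: Persona, question: str, mode: Mode) -> str:
    """Senior UX agent step: assemble the text sent to the model."""
    lines = [
        f"You are interviewing the persona {persona.name}.",
        f"Response mode: {mode.value}.",
    ]
    lines += [f"{key}: {value}" for key, value in persona.traits.items()]
    if persona.gaps:
        lines.append("No evidence for: " + ", ".join(persona.gaps) + ". Say so instead of guessing.")
    lines.append(f"Question: {question}")
    return "\n".join(lines)


def run_simulation(persona: Persona, question: str, mode: Mode,
                   ask_model: Callable[[str], str]) -> str:
    """Send the assembled prompt through a caller-supplied model function."""
    return ask_model(build_ux_prompt(persona, question, mode))
```

The point of the sketch is the separation of concerns: the first step never invents a field it has no evidence for, and the second step passes those gaps along so the simulation can acknowledge them.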

2.1. The synthetic personas: João and Susana

To ensure that the test was effective, we selected two profiles that embody the different user journeys:

João, the field seller: an enthusiastic profile, focused on quick negotiation and "face-to-face" closing. For him, every second counts. This profile closes small purchases, from smaller companies.

Susana, the strategist: a suspicious and methodical profile. She uses parallel apps to log orders and then re-enters everything into the main system to ensure there are no tax or discount errors. This is a profile that places large orders.

After configuring the research agent (to create the personas from the data) and the Senior UX agent (to simulate the conversation with them), I ran the roleplay mode command in the terminal, and the AI usability test came to life. It was in this synthetic dialogue that we saw the potential of synthetic personas to pinpoint areas for improvement in the app’s flow!

2.2. What did we learn about using synthetic personas?

At the end of the process, we had gained important insights that led to a much more mature flow proposal, but the biggest lessons were about how to work with AI in design.

2.2.1. The persona is a reflection of the data used

I learned that a synthetic persona is a "time capsule". If the source material (transcripts, business information, research, etc.) is old or incomplete, the persona will struggle to evaluate significant changes, because no real data about them exists yet. In other words, there is a high chance that the results will not reflect the current behavior of users or the current business objectives.

Because of this, it is crucial to emphasize that AI does not replace the need for ongoing field research; it merely enhances the analysis of what has already been collected. Therefore, to utilize synthetic personas, it is advisable to have a continuous discovery habit embedded in your team to make your results more accurate.

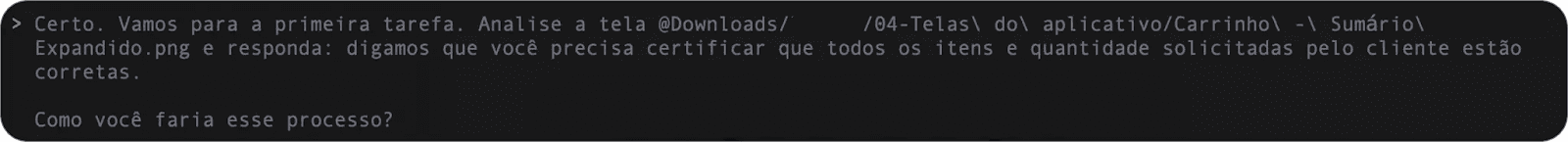

2.2.2. The accuracy depends on the prompt structure

The test revealed that AI tends to ramble if the questions are generic. To extract value, it is necessary to direct the persona with explicit references.

Example of one of the questions (with directions and references) sent for the simulation.

For instance, instead of asking, "What do you think of the app's checkout?" we would ask, "Based on your pain point of lack of fiscal transparency, what is your opinion about this grouping of discounts in the final step on the page (citing the folder/document where the page is located)?". Structured and conditioned questions generate detailed responses; loose questions yield confusing answers.
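As an illustration, a structured question of this kind can be treated as a small template with three required parts. The sketch below is hypothetical (the class `StructuredQuestion`, the helper `is_generic`, and the example path are not from our actual setup); it only shows one way to make the conditioning and the reference mandatory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StructuredQuestion:
    pain_point: str      # persona pain point that conditions the question
    element: str         # screen element or step being evaluated
    artifact_path: str   # where the screen lives (hypothetical path)

    def render(self) -> str:
        """Build the question text with its condition and reference."""
        return (
            f"Based on your pain point of {self.pain_point}, "
            f"what is your opinion about {self.element}? "
            f"(Reference: {self.artifact_path})"
        )


def is_generic(question: str, known_pain_points: list[str]) -> bool:
    """Flag a question as generic when it mentions none of the persona's pain points."""
    lowered = question.lower()
    return not any(point.lower() in lowered for point in known_pain_points)
```

For example, `is_generic("What do you think of the app's checkout?", ["lack of fiscal transparency"])` returns `True`, while a question rendered from `StructuredQuestion` with that pain point returns `False`.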

2.2.3. Roleplay as a way to gather quick critiques

The greatest advantage was the agility to conduct synthetic A/B tests. We were able to quickly contrast the reactions of two opposing profiles (the enthusiast vs. the skeptic). This allowed us to identify logical flaws and usability improvements in minutes, something that would take days in a traditional recruitment cycle.

Furthermore, in a future iteration, automating the entire process and using more granular prompts would make collecting improvements even faster and better supported by evidence!

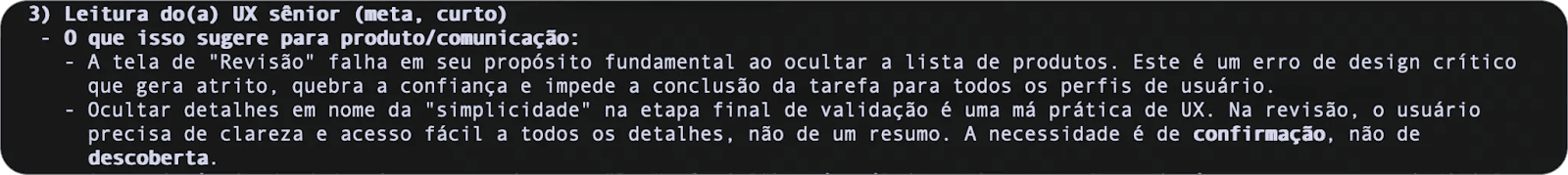

2.2.4. Synthetic analysis as a support point

The use of the agent in "mixed mode" (roleplay + UX reading) enabled the AI not only to simulate the user but also to provide a technical critique of the simulation itself. This helped to map data gaps: the AI itself would alert us when it did not have enough evidence in the repository to respond confidently or would directly mention which data source it used to answer.

This opened doors to exploring tests in a multi-agent structure: dividing the test objectives among multiple agents (for example, one to analyze previous recordings in order to capture moments of doubts and emotions; one to analyze the screens and already raise design critiques; etc.).

Example of the analysis that the “Senior UX” agent provided after a simulation.

2.2.5. Accelerated learning curve about the business

An unexpected benefit was how synthetic personas helped me learn about the client's business faster. I was able to clarify many questions about the business and its users by asking the persona directly, since it had been fed all the previous materials of the product.

Later, in a synchronous meeting with the team, I confirmed that the points she brought up were consistent with the information the project team had.

I saw this as an opportunity to resolve questions that previously would have required scheduled meetings with colleagues on the project, depending on each person's agenda. Now, I can "ask" her first, especially in situations where time is a challenge.

Of course, I always keep the limitations of AI in mind: I ask only about subjects for which we have data, and I always confirm crucial points with the team before taking action.

3. AI to refine, humans to validate

A fundamental learning from this onboarding was the demystification of the role of AI. The use of synthetic personas is a refinement tool. It allows the designer to reach validation with humans with a much more mature prototype, having already "cleaned" obvious errors in logic and flow.

In other words, it does not discard the need for validation with real users but rather optimizes the product before real tests so that the focus of validation is on crucial points for the business.

4. The Boundaries of AI

Although the use of synthetic personas has accelerated our design cycle, methodological maturity requires recognizing where technology still faces barriers (and what we can iterate or not). During the process, we mapped limitations that define the role of AI as a refinement tool, not a replacement:

4.1. The dependence on data

A synthetic persona is only as deep as the data used to feed it. If there are gaps in the original materials or if they are outdated (which was our case), AI will not be able to predict accurate reactions. For example, in our simulation, the synthetic user often focused on a problem in their journey that has since been resolved, because the tests with real users that fed the persona were conducted before this improvement. In this scenario, we understood that the persona reflects the past to try to anticipate the future; thus, continuously refreshing it with real data is important.
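One simple safeguard, sketched below under assumptions, is to compare the collection date of each source with the date of the latest relevant product change before feeding the persona. The `Source` type and `stale_sources` function are hypothetical names, and the sketch assumes those dates are recorded somewhere.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Source:
    name: str            # e.g. a transcript or metrics export (hypothetical names)
    collected_on: date   # when the material was gathered


def stale_sources(sources: list[Source], last_product_change: date) -> list[str]:
    """Return the names of sources collected before the most recent product change."""
    return [s.name for s in sources if s.collected_on < last_product_change]
```

A non-empty result does not mean the material is useless; it is a reminder that the persona may react to problems that no longer exist.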

4.2. The "surprise" and emotional factor

The focus of AI is on logic and pattern detection, but it still fails to replicate human unpredictability. Complex emotions, such as hesitation when seeing a new button/screen, are issues that the simulation could not capture. As pointed out by the NN Group study on the subject: human behavior is complex and context-dependent, and synthetic users cannot capture this complexity.

4.3. Qualitative vs. quantitative precision

During the tests, we noticed that the analysis leans more towards the qualitative. While AI excels at pointing out why a flow is confusing, measuring task time in a synthetic environment still lacks sufficient verification beyond a suggestion from AI. The simulation answers the "what" and the "why", but the "how much" still belongs to real usability tests!

4.4. The risk of hallucination

Without a rigorous prompt structure (and especially without a multi-agent structure), AI may "ramble" or try to fill information gaps with generic assumptions about the data. Presenting the synthetic persona's responses followed by the agent's UX analysis was crucial to separate what was potential evidence from what was inference, keeping the result “clean” of responses created without context or reliable data.
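The separation between evidence and inference can also be checked mechanically. The sketch below assumes a convention that is not part of our original prompts: the agent is asked to end every statement backed by a source with a tag such as `[source: file name]`. The tag format, `SOURCE_TAG`, and `split_evidence` are all hypothetical.

```python
import re

# Hypothetical convention: sourced statements carry a "[source: ...]" tag.
SOURCE_TAG = re.compile(r"\[source:\s*([^\]]+)\]")


def split_evidence(statements: list[str]) -> tuple[list[tuple[str, str]], list[str]]:
    """Split statements into (text, source) evidence pairs and untagged inferences."""
    evidence: list[tuple[str, str]] = []
    inference: list[str] = []
    for statement in statements:
        match = SOURCE_TAG.search(statement)
        if match:
            # Remove the tag from the text and keep the cited source next to it.
            text = SOURCE_TAG.sub("", statement).strip()
            evidence.append((text, match.group(1).strip()))
        else:
            inference.append(statement.strip())
    return evidence, inference
```

Anything that lands in the inference list is a candidate for a follow-up question or for validation with real users, rather than something to act on directly.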

Finally, recognizing these limitations does not diminish the value of the methodology. On the contrary, it gives the designer the necessary insight to know exactly when to trust the simulation, when to iterate the method, and when it’s time to go into the field to listen to real users.

Leading with awareness

During my onboarding, I learned that Taqtile has been living with technological transformations since its inception. Experiencing these changes, I understood that technology changes, but it is people who lead the advancements.

In the case of AI, it is no different! As this experiment has shown, synthetic personas can compress weeks of learning into a few days of simulation. However, it is not the technology itself, but how we use it that determines the success or failure of a project… or even an experiment.

The use of organized agents for each stage provided us with agility to fail fast and correct before we invested time in real recruitment and interviews.

Interested in learning more? Here at Taqtile, we face daily the challenge of implementing Artificial Intelligence in large corporations. Follow our page on LinkedIn, where we are always sharing new learnings, techniques, studies, and strategies involving the technology!