Beyond compliance: the strategic value of responsibility in AI adoption

A report on Taqtile's experiences and lessons on how this complex and sensitive topic can be better addressed in corporate environments.

Jan 26, 2026

In the race to adopt Artificial Intelligence, ethics is commonly seen only as a set of restrictions or compliance barriers. Here at Taqtile, we have a different view: we believe that ethical responsibility is not a brake, but a competitive advantage capable of protecting the brand's reputation and generating new opportunities for value.

We know that the topic is complex and often difficult to make concrete in the day-to-day of projects. However, ignoring this layer is not an option. Caring about ethics is, ultimately, caring about the longevity of the business. Even if regulatory and social demands are still maturing, brands that anticipate them build much stronger foundations to scale their innovations.

In this article, Nicolás de Arriba shares practical learnings from dialogues with Tuanny Martins and Danilo Toledo, who lead these processes here at Taqtile. Below, you will find strategies to take the subject out of the theoretical realm and integrate it into innovation processes incrementally, treating ethics as a continuous learning process that evolves along with technology.

Responsibility and Artificial Intelligence: how to deal with ethical challenges in technology adoption

By Nicolás de Arriba

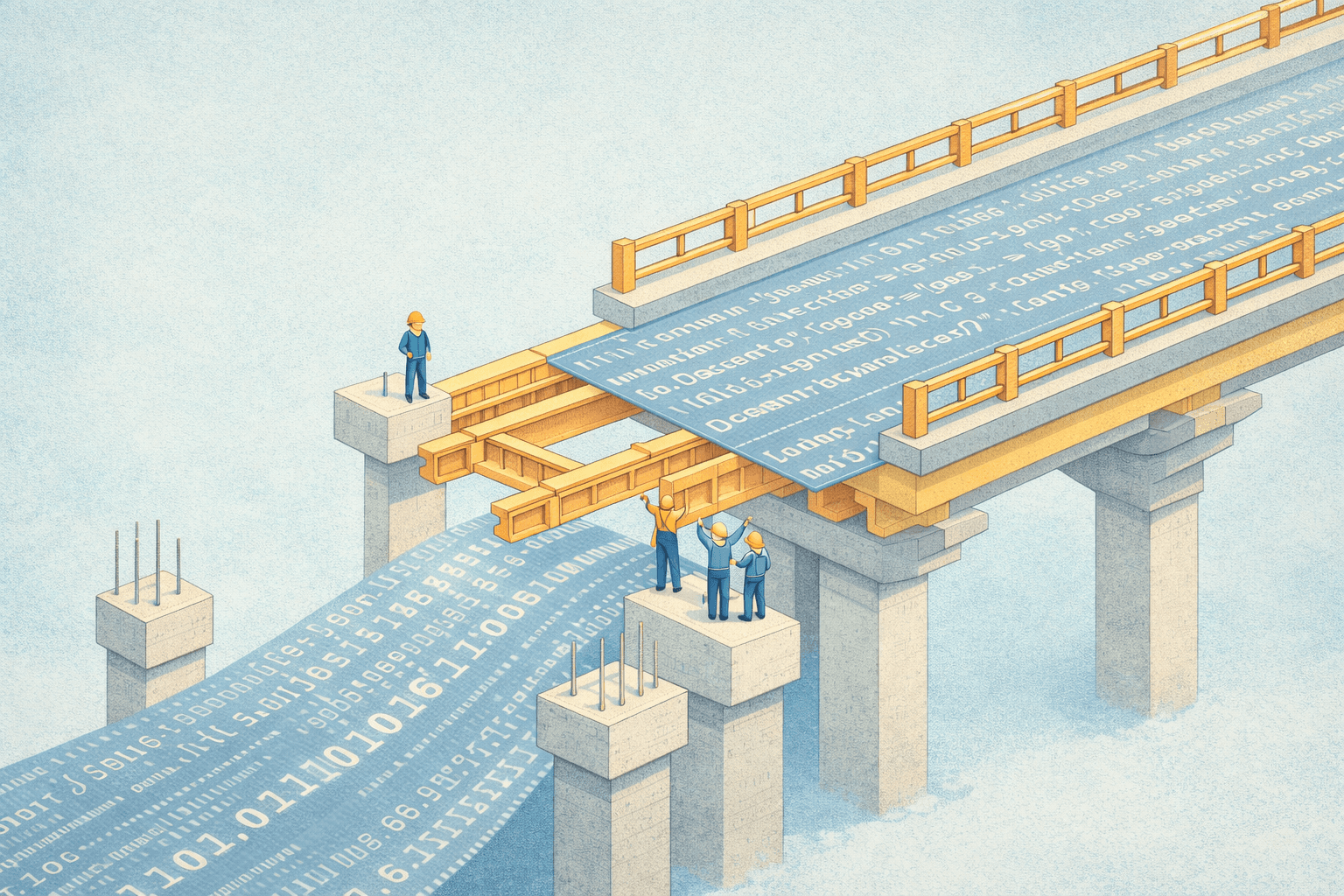

Concept/Prompt: Nicolás de Arriba · Image: DALL-E 3

A common tension in innovation and Artificial Intelligence projects is that ethical challenges, due to their vastness and complexity, risk becoming an "elephant in the room." Everyone knows it is there, taking up space, but the difficulty of addressing all its ramifications often leads to the problem being postponed or even forgotten.

And this partly makes sense: any innovation project faces many barriers, and one of this magnitude can decide whether a project continues. This leads to one of Taqtile's main learnings regarding the ethical challenge: the complexity of the topic should not overshadow the size of the initiative being discussed. The ethical discussion needs to grow as the project progresses.

As the conversations with Tuanny Martins and Danilo Toledo, who lead these discussions at Taqtile, show below, the alternative to either pausing a project or avoiding the subject is to work on it incrementally.

Therefore, the goal of this text is to share what we have learned at Taqtile about how to keep the topic from being sidelined. The key is not to have all the answers and commitments on day one, but to transform ethics into a manageable process, one that is addressed and matures at the same pace as the project.

The importance of making it a topic of discussion

Tracking the development of this topic reminded me of when I first encountered the research of Sheila Jasanoff, an American social scientist who is a reference in the field of Science, Technology and Society (STS) studies. Studying the impact of new technologies on society, she became known for highlighting a gap in how we think about society: we tend to disregard how technologies affect the production of social orders.

In her studies, Jasanoff presents the concept of the sociotechnical imaginary, which refers to collectively shared and publicly performed visions of desirable futures that are seen as achievable through advances in science and technology. In this scenario, the author shows, we tend to look at innovation only from the perspective of the solution, disregarding how it affects and produces new social orders.

In this sense, the author argues that the greatest risk arises not from the technology itself, but primarily when responsibility and ethical challenges are left out of these sociotechnical imaginaries.

Not by coincidence, this is one of the central concerns at Taqtile: beyond seeking compliance, studying the subject aims to make it manageable and addressable in practice. To share what we have already learned from internal discussions, we have consolidated three strategies to bring the discussion into the corporate environment.

1. The topic must accompany the evolution of the project

How, then, can the subject be put into practice without stalling innovation? The first strategy, suggested by Danilo, is the incremental approach.

"Ethics cannot be an afterthought; it needs to be present from the starting point and evolve in complexity with the project." Therefore, the role of consulting is to raise awareness about broad risks from the outset, but to address tensions proportionally to the stage of development. This prevents ethics from becoming a sensitive and insurmountable block, allowing it to be addressed step by step, tracking the increase in complexity and impact of the solution.

2. Approach through the notion of efficiency: the cost of not doing

Tuanny reminds us that there is also a pragmatic dimension to address. Treating ethics from the outset is a matter of efficiency.

For example, the cost of remedying a poorly constructed AI model is far greater than the cost of developing it with responsibility criteria from the start. "AI models learn and amplify patterns. If the bias in the data is not addressed at the outset, it crystallizes in the system's architecture. Correcting this later may require anything from a costly retraining to discarding the model entirely. Moreover, technical aspects such as explainability (the system's ability to detail how it reached a conclusion) often cannot be 'fitted in' later."

Beyond that, in the long run, thinking about compliance and responsibility from "day zero" is about protecting the investment, especially since this is a market that is likely to be increasingly regulated. Addressing the ethical aspect of technology helps to anticipate where this regulation is likely to land, allowing companies to get ahead.

3. Ethics is also about generating value

Something that Tuanny highlights is that “it is essential to change the perspective: moving from a defensive stance ('blocking AI to prevent harm') to a proactive stance. Ethics is not only for mitigating risks; it generates opportunities for value.”

A practical example she shares illustrates this point: “when developing an internal prototype, we realized that the AI used predominantly male language and examples, reflecting the bias of its training data. By identifying this, it was possible to adjust the model to adopt neutral and inclusive language with a precision and scale that would be difficult to achieve manually. Here, the ethical perspective was not a brake, but a lever to deliver a technically superior and socially more appropriate product.”
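To make this kind of observation measurable, a rough first check can count gendered terms in a sample of generated texts. The sketch below is only an illustration under stated assumptions: the word lists and the function names `gendered_term_counts` and `masculine_share` are hypothetical, cover English only, and are not the method used in the prototype described above.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny word lists, for illustration only.
MASCULINE_TERMS = {"he", "him", "his", "man", "men", "businessman", "chairman"}
FEMININE_TERMS = {"she", "her", "hers", "woman", "women", "businesswoman", "chairwoman"}


def gendered_term_counts(texts):
    """Count masculine and feminine terms across a list of generated texts."""
    counts = Counter(masculine=0, feminine=0)
    for text in texts:
        # Lowercase the text and split it into simple word tokens.
        for token in re.findall(r"[a-z']+", text.lower()):
            if token in MASCULINE_TERMS:
                counts["masculine"] += 1
            elif token in FEMININE_TERMS:
                counts["feminine"] += 1
    return counts


def masculine_share(texts):
    """Share of gendered terms that are masculine, or None if none were found."""
    counts = gendered_term_counts(texts)
    total = counts["masculine"] + counts["feminine"]
    if total == 0:
        return None
    return counts["masculine"] / total
```

A share close to 1.0 over a large sample would suggest the skew described in the example; a real assessment would need richer vocabulary, more languages, and human review.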

Therefore, looking at the proactive side of Artificial Intelligence is not just about desirable (and distant) futures, but also about understanding how the technology's capabilities can solve problems today.

How Taqtile is deepening its approach to the challenge

At Taqtile, just like in the market, the complexity of the topic is still being mapped and elaborated. In building its own tools, Taqtile aims not only to raise awareness about the topic but also to bring it to the practical reality of companies and projects.

In this sense, a working group at Taqtile is finalizing a Responsible AI assessment checklist. Designed to evaluate specific vulnerabilities, it is intended as a structured way to assess and implement ethical practices in Artificial Intelligence projects. Additionally, Taqtile's proprietary technology acceleration methodology, the AI Sprint, includes dedicated steps to raise awareness among companies regarding the topic and to map risks in the context of the project.
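One way to picture a checklist that grows with the project is to tag each item with the stage from which it becomes mandatory. The sketch below is a minimal illustration, assuming hypothetical stage names and questions; `STAGES`, `ChecklistItem`, and `open_items` are invented for this example and do not reproduce Taqtile's checklist or the AI Sprint.

```python
from dataclasses import dataclass

# Hypothetical project stages, ordered from earliest to latest.
STAGES = ["discovery", "prototype", "pilot", "production"]


@dataclass
class ChecklistItem:
    question: str
    required_from: str  # first stage at which the item must be answered
    done: bool = False


def open_items(items, current_stage):
    """Return items already required at current_stage that are not yet done."""
    current = STAGES.index(current_stage)
    return [
        item for item in items
        if STAGES.index(item.required_from) <= current and not item.done
    ]


# Illustrative items only; not an actual assessment checklist.
checklist = [
    ChecklistItem("Are the broad risks of the use case documented?", "discovery", done=True),
    ChecklistItem("Has the training data been reviewed for bias?", "prototype"),
    ChecklistItem("Can the system explain how it reached a conclusion?", "pilot"),
]
```

Calling `open_items(checklist, "prototype")` would return only the bias review, while the explainability question stays out of the way until the pilot stage. This keeps early conversations light without losing sight of what will be required later.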

Still, recognizing the distance between the complexity of the topic and the ongoing initiatives, Taqtile's position is clear: deal with complexity incrementally, rather than avoiding or sidelining the challenges.

Where does responsibility start and end?

In conclusion, I would like to revisit another author I studied throughout my education. The anthropologist Dinah Rajak, in her book In Good Company (2011), provides an anatomy of the notion of Corporate Social Responsibility (CSR). Rather than treating it only as a modern phenomenon, she shows that the notion of responsibility is part of the very history of the formation of corporations.

As the author writes, although the idea of Corporate Social Responsibility is "frequently presented as a distinctly modern phenomenon, a product of millennial concerns about social and ecological sustainability in an era of globalization" (Rajak, 2011, p. 10, my translation), it emerges much earlier in the course of corporate history.

With this, Rajak argues that fixing in advance where the boundaries of companies' role and responsibility on social matters lie, and especially how that role is or should be exercised, does not fit a pragmatic perspective on the subject. Doing so also ignores that the notion of responsibility can be traced back to the philanthropic industrialists of Victorian Britain in the 19th century, such as Rowntree, Cadbury, or Lever, and their self-proclaimed commitment to social improvement (Sartre, 2005; Rajak, 2011).

In other words, even if it is possible to establish clear limits of responsibility concerning technology adoption and its impacts, for the author these limits not only can but should always be reconsidered. Therefore, beyond raising awareness about potential negative impacts in technology adoption processes, it is necessary to find ways to include responsibility in the sociotechnical imaginary itself, rather than treating it as a topic that belongs to a sphere other than that of the companies themselves. The risks involving new technology also belong to the companies.

While the strategies raised in this discussion have shown interesting results, we recognize that there is still a long way to go. To learn more about how Taqtile is implementing technology in large companies, follow our page and keep track of experiences, learnings, and cases developed by the company's own team.