We are investing the wrong way: the real problem with adopting generative AI.

Feb 2, 2026

Concept/Prompt: Danilo Toledo · Image: Midjourney

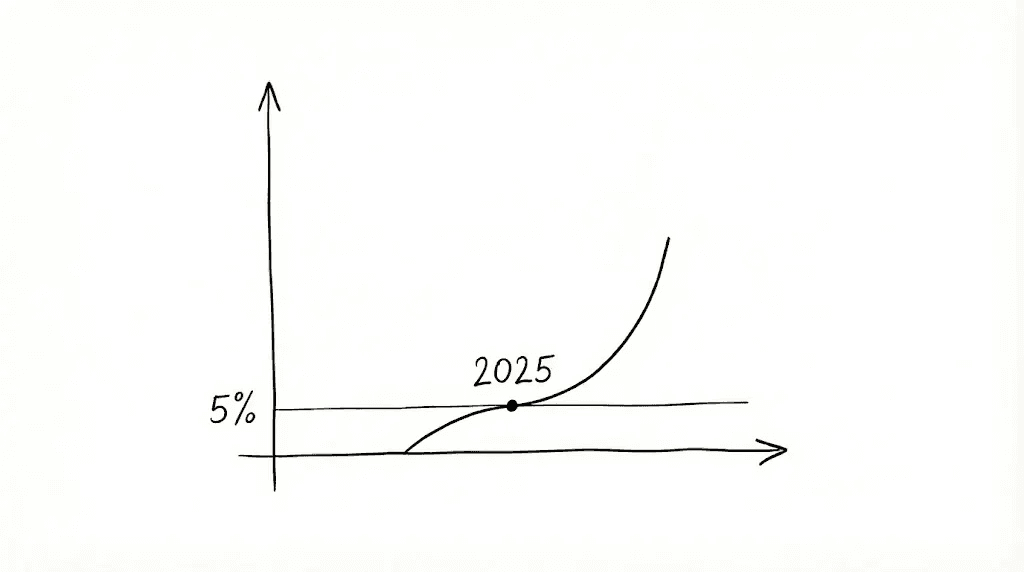

I don't remember any other research making as much noise this year as MIT NANDA's report, The GenAI Divide. The anti-AI pessimists wasted no time and republished the headline everywhere: '95% of GenAI pilots in enterprises had zero return in 2025.'

For those of us who remain in the room — those who understand that Generative AI in enterprises is a one-way street — the mission is much more pragmatic: ignore the noise and focus on the signal. What replicable patterns do the 5% of successful cases have in common?

Among MIT's conclusions, three points resonated most strongly with what we have seen at Taqtile. They were hard-earned lessons, perhaps avoidable if we had paid closer attention to past waves of innovation. In any case, 2025 marked a natural turning point, and it is time to share what we learned so that we can all move forward stronger.

Outlined below, the three main points are:

GenAI MVPs need to be even smaller than we expected

Treat AI agents like new hires. And set promotion criteria

Understand where GenAI excels (and where it struggles)

1. GenAI MVPs need to be even smaller than we expected

We have become accustomed to building MVPs focused on digital transformation. Start small, validate, scale. But what surprised us is that GenAI requires starting points even smaller than traditional digital applications — and this is due to two contradictory reasons:

Because GenAI is a more flexible and adaptable technology, it can deliver value much faster.

At the same time, because it is less predictable, the potential for failure multiplies just as quickly.

Teams that are generating real value with AI agents are not trying to automate complete and complex processes from day one. Those projects tend to drag on, stuck in the endless handling of exceptions (edge cases).

The projects that truly deliver results — like the 5% from MIT's study — start with something so focused that it becomes embarrassing to justify. And that’s exactly where the real work begins: educating and aligning expectations. It's harder than it looks to manage stakeholders who, dazzled by the potential of GenAI, expect the technology to solve fundamentally different problems all at once.

Using sales as an example: building an autonomous agent that manages the entire pipeline, from the first contact to closing, is a challenge that — although possible — will likely trap your team in an endless cycle of tweaking exceptions. It will take much longer to generate results than an agent that simply qualifies and warms up leads. Less sexy, of course, but much smarter to start with an AI that identifies who is showing buying signals and passes those prospects — prepared and ready — to your human team. Your sellers stop wasting hours on leads that won't convert. They focus on closing. The value appears immediately.

MIT's study confirms this pattern: solutions focused on "small but critical workflows" achieve $1.2 million in annualized value within 6 to 12 months. These projects typically take 90 days from pilot to full implementation. And projects that tackle broad and complex processes? Nine months or more, often with nothing to show.

Start smaller than feels comfortable. Prove value. Expand from the results.

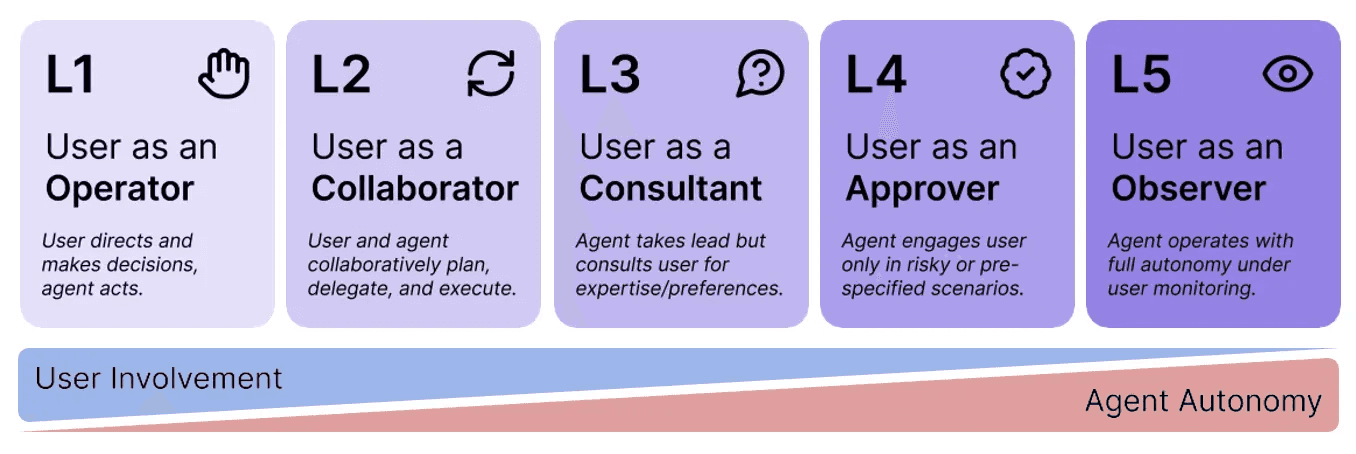

AI Agents Guided Autonomy. Concept/Prompt: Danilo Toledo · Image: Midjourney + Gemini

2. Treat AI agents like new hires. And set promotion criteria

Your best collaborators did not arrive on the first day with total autonomy. They earned it.

The same principle applies to AI agents. However, most companies grant too much autonomy, too quickly, and watch the agents fail. The opposite is equally risky: overly restricted agents, waiting for perfection, may never deliver value. It's a stagnation problem.

An approach proposed by researchers at the University of Washington — Levels of Autonomy for AI Agents (Feng, McDonald & Zhang, 2025) — has been crucial in Taqtile's projects. It is a framework of increasing levels of autonomy that describes five roles that the user can take when working side by side with an agent: operator, collaborator, consultant, approver, and observer. The key point: the agent does not skip steps. It is promoted as it delivers results.

Levels of Autonomy for AI Agents. Feng et al. Preprint, 2025

Continuing with the sales workflow example: in practice, this means starting with agents that accompany your sellers. These AI co-pilots work side by side with your team, drafting messages for human review, suggesting next actions, and learning from corrections. They are in training.

As they prove their value — as the saved time increases, the message editing rate decreases, and the team's confidence grows — you promote them. They begin to handle real interactions with clients, initially under supervision, and then with increasing independence.

The secret lies in clear operational metrics from day zero. Not subjective metrics about model accuracy — business metrics.

Time saved per seller.

Editing rate of AI-generated messages.

Conversion rate of leads qualified by AI.

These metrics indicate when an agent is ready to take on more responsibilities.
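As a rough illustration of how such promotion criteria could be written down, here is a minimal Python sketch. It assumes the five user roles from the framework map to increasing agent autonomy in the order listed above. The names `UserRole`, `AgentMetrics`, `PromotionCriteria`, and `next_role`, as well as every threshold value, are hypothetical and are not taken from the paper or from any Taqtile project.

```python
from dataclasses import dataclass
from enum import IntEnum


class UserRole(IntEnum):
    """The user's role at each level, in the order the framework lists them."""
    OPERATOR = 1
    COLLABORATOR = 2
    CONSULTANT = 3
    APPROVER = 4
    OBSERVER = 5


@dataclass(frozen=True)
class AgentMetrics:
    hours_saved_per_seller: float  # time saved per seller, per week
    edit_rate: float               # share of AI drafts edited by humans (0..1)
    lead_conversion_rate: float    # conversion of AI-qualified leads (0..1)


@dataclass(frozen=True)
class PromotionCriteria:
    # Illustrative thresholds only; real values come from your own baseline.
    min_hours_saved: float = 3.0
    max_edit_rate: float = 0.20
    min_conversion_rate: float = 0.10


def next_role(current: UserRole, metrics: AgentMetrics,
              criteria: PromotionCriteria = PromotionCriteria()) -> UserRole:
    """Promote by at most one level, and only when every criterion is met."""
    ready = (
        metrics.hours_saved_per_seller >= criteria.min_hours_saved
        and metrics.edit_rate <= criteria.max_edit_rate
        and metrics.lead_conversion_rate >= criteria.min_conversion_rate
    )
    if ready and current < UserRole.OBSERVER:
        return UserRole(current + 1)  # the agent does not skip steps
    return current
```

Calling `next_role(UserRole.OPERATOR, AgentMetrics(4.0, 0.15, 0.12))` moves the user from operator to collaborator, while an agent whose drafts are still edited half the time stays where it is. In practice, a team would probably also want a demotion rule and a minimum observation window before any change.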

Agents that are focused from the start gain the team's trust more quickly. There are fewer exceptions to adjust, fewer failures that undermine confidence. More than half of the leaders interviewed by MIT cited tools that "fail in exception cases and do not adapt" as the main reason their pilots never went into production.

3. Understand where GenAI excels (and where it struggles)

The first wave of GenAI applications created an illusion: that everything could be solved with prompt engineering and retrieval-augmented generation (RAG) tricks. Reality has shown that there is no silver bullet: purely generative systems tackling complex workflows have repeatedly failed.

And here’s something we see constantly: every workflow you think is simple reveals surprising complexity when you try to automate it — especially in high-volume contexts, such as customer-facing applications.

What the 5% from MIT also seem to understand: successful projects resemble traditional software applications more than the magic of Generative AI.

What we have seen here is that the winning architecture is not pure GenAI. It is hybrid: deterministic flows to ensure reliability, combined with generative AI where it truly excels. GenAI is remarkable at understanding and generating natural language, dealing with ambiguity, and adapting to context. But business logic? Predictable rules? Exception handling? Traditional engineering still prevails.

In our projects at Taqtile, the best results come from this combination. Specialized agents with controlled autonomy better manage context, adhere to business rules more accurately, and scale with much greater reliability than systems that try to solve everything solely with GenAI.

The recipe for success in production is simple, but vital: build a solid foundation of deterministic flows and use generative AI in a targeted way, only where it performs well.
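To make the hybrid idea concrete, here is a minimal Python sketch using the lead-qualification example from earlier. Business rules and exception handling are ordinary code, and the generative step is a single injected function. Everything here is an assumption made for illustration: the `Lead` fields, the `handle_lead` function, the budget threshold, and `fake_draft_reply`, which stands in for a real model call so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Lead:
    name: str
    budget: int        # hypothetical field, in dollars
    opted_out: bool
    last_message: str


def handle_lead(lead: Lead, draft_reply: Callable[[str], str],
                min_budget: int = 10_000) -> dict:
    """Deterministic flow; the generative step runs on one branch only."""
    # Business rules: plain code, fully predictable and testable.
    if lead.opted_out:
        return {"action": "skip", "reply": None}
    if lead.budget < min_budget:
        return {"action": "nurture", "reply": None}
    # Language: delegate only the drafting to the generative model.
    reply = draft_reply(lead.last_message)
    # Exception handling: fall back to a human if the draft is unusable.
    if not reply.strip():
        return {"action": "escalate_to_human", "reply": None}
    return {"action": "send_for_review", "reply": reply}


def fake_draft_reply(message: str) -> str:
    """Stand-in for a real model call (an assumption of this sketch)."""
    return f"Thanks for reaching out about: {message}"
```

Because the model sits behind a plain callable, the routing rules can be unit-tested without calling any model, and the drafting step can be swapped or tightened later without touching the business logic.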

From noise to signal: what it takes

The journey to escape cycles that lead to strategic paralysis and wasted investments, towards the profitable integration of Generative AI, requires embracing a pragmatic engineering mindset:

Extreme Focus: start ultra-small and tackle critical workflows, proving immediate value in 90 days, instead of chasing full automation.

Governance: grant guided autonomy, treating AI agents like new hires who need to earn trust and responsibility based on clear business metrics, not on subjective model accuracy.

Architecture: prioritize the hybrid model, using deterministic flows to ensure reliability and reserving GenAI for where it truly excels (language and ambiguity).

This is the true difference between noise and signal, and this is the turning point of 2025. It’s time to leave behind the expectation of the "silver bullet" and build incrementally and with discipline.

The lessons from AI Agents in enterprises: 2025 as a turning point. Concept/Prompt: Danilo Toledo · Image: Gemini

The scale of the risk of not carrying these lessons into 2026

The risk of not putting these lessons into practice now is tangible, and MIT's research points to a worrying trend.

In the coming quarters, many companies will get stuck in commercial contracts with solutions that, although impressive, will not solve their real problems.

The scenario is predictable. We've seen this movie before. Sellers from major tech platforms, pressured to show returns on the investments made to force AI into their products, will arrive well prepared, armed with impressive demos that do not integrate with your real workflows.

And the companies that do not replicate the patterns of the 5% highlighted by MIT will discover — too late — that these systems fail in most of their business-specific use cases. They will lose money, time, and the peace of mind of their decision-makers.

The shortcut validated by MIT: The right approach

At the same time, the research identifies an interesting shortcut for acceleration: approach, not technology.

Organizations that stand out establish partnerships with external experts who help them understand their own workflows first, and only then adapt AI solutions that integrate into existing workflows — and not the other way around.

The data supports this: projects executed with external partners have 2x the chance of success and reach production 3x faster.

Why do external partners accelerate results? Well, we're biased, but we see at least three decisive reasons:

Genuine Multidisciplinarity: teams that combine engineering, design, strategy, and research from Day 1, not siloed departments that only meet in status meetings.

Recognition of Cross-Sector Patterns: partners who have worked on dozens of implementations know which approaches work in which contexts. They have already gone through the mistakes that internal teams are about to make.

Continuous Updates: teams dedicated to researching GenAI, tracking models, frameworks, and best practices daily, without that work competing with internal priorities.

The answer we found at Taqtile: AI Sprint

MIT's research indicates that it is not technology that separates success from failure. The combination of profiles, methodologies, and capabilities needed to develop successful GenAI initiatives tells us that it is not merely a matter of tool selection — it’s a matter of approach.

The answer we found at Taqtile lies in rethinking widely validated methodologies. We adapted the Design Sprint, a proven innovation framework from the era of digital transformation, for the challenges of GenAI. The result is our AI Sprint. This approach is ultra-focused, hybrid, and built on controlled autonomy, so that AI agents are set up to deliver value from Day 1.

Ultimately, GenAI is a journey of continuous learning, and the knowledge of those building it is our most valuable asset. If you are one of those who stay in the room, navigating the challenges of implementing GenAI in your organization, we would love to continue this conversation.

If you want to learn more about how we apply these principles in practice, check out our AI Sprint framework.